AI: Non-technical Crash Course

"You will never be good in AI. 🚫 And that's okay."

This is a response to this LinkedIn post.

In a sea of clueless bullshitters LinkedIn AI Influencers, the above post stood out to me as I scrolled with my morning coffee. It was to the point, grounded, and genuine. The theme here is knowledge that is useful and easy to leverage - let’s roll with that.

I woke up at 4:45 and will start work at 5:30 so I need to finish this post by then. Let’s make it short, fast, actionable, and hopefully useful to at least one of you. We’ll walk through a simplified version of a setup I recommend to my non-engineer friends.

What Will This Get You?

Use case: “Good morning. What did I miss?” (Lots of red but its all accurate/useful).

Use Case: “What do you remember from the last two days?”

Use Case: someone sends Platform a message on slack about an outage. This:

Picks it up from Slack automatically and dispatches a Boomerang job.

Checks Sentry, Prometheus, GCP, K8S (kubectl), and LogRocket.

Checks recent memories, Google Drive, Linear, JIRA, Calendar, etc.

Replies to that person in Slack, let you know, and if necessary, creates tickets.

The tickets a setup like this creates are GOOD. Like, actual acceptance criteria an Engineer can pick up and start immediately good. Similar tools govern my daily priorities, what I need to do, help me communicate via email, and other things.

What Are We Doing?

3 personalized contexts, an MCP server, and an A2A implementation Are All You Need. After 15 years of ML research (and applied ML - I do things! sometimes), I understand things well enough to explain them in simple and concise terms.

These 3 things (queue BuzzFeed clickbait) are the AI “80” of 80/20 for non-engineers:

CONTROL YOUR DATA. A synced OneDrive/iCloud/GDrive folder is a good start.

Check HF Leaderboards for models relevant to YOUR use cases every 2 weeks.

Create stateful* contexts for YOUR use cases. I’ll show you how to do this below.

*- Stateful here means with persistent memory and dedicated embedding back-ends.

Let’s Do The Thing

Install Visual Studio Code (ab: VSC). Click on the icon that looks like a lobotomized Rubik’s Cube in the top left sidebar and install the Cline and Roo Code extensions:

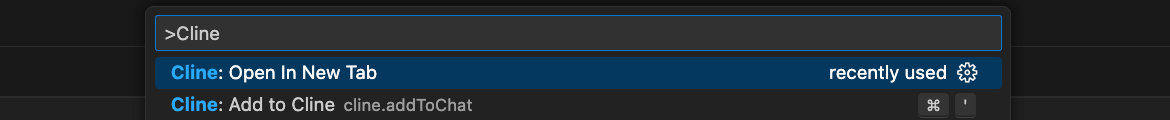

Get API keys from OpenAI, Anthropic, and Tavily (web search that doesn’t suck). In the top middle of VSC (Visual Studio Code), there is a text box. We’re going to click on that text box. Then we will move our hands over to our keyboard, type in “>Cline”, and press Enter or put our hands back on the mouse to click “Cline: Open In New Tab”

A wild UI component appears. Don’t panic! We got this. Click on the box that looks like the three stacked pizzas you ate last Saturday before passing out (bottom left):

Okay, my coffee is slowly kicking in so we’re going to go a bit faster now. Go to the marketplace tab on the left and install the Tavily MCP server plugin (pictured below).

Cline will walk you through the entire process, test, and if necessary troubleshoot + fix the MCP server installation. All you have to do is type in (presumably) English.

We’re AI wizards now, Harry. We now have a place where we can use any model with robust web search functionality (please don’t go on LinkedIn and call this an Agent).

Cline In A Nutshell

Think of Cline as a middle manager. It’s good at orchestrating MCP servers and Roo Code, but it doesn’t actually do any work except Rules and Workflows - you can find those by clicking on what the US justice system should’ve been. Bottom left of Cline:

You may have noticed the annoying yellow highlight that both lets us know we are not using an Apple product and sums up the first thing life crushes in adulthood: “Plan”

Cline is good at freeform planning. Ask it to plan something, then prompt it to update that plan. Like the middle manager that it is, Cline is good for doing deep research which we can then ask it to store in our local Mem0 MCP server for Roo Code.

That brings us to the limit of usefulness that is Cline. To reiterate:

Configuring and troubleshooting MCP Servers

Rules and Workflows. Read more about those.

Deep research and local memory management

Roo Code: The Nut In The Shell

Cline and Roo used to be the same person (Roo is a branch of Cline). Then they had a fight, and now they’re frienemies. They still have similar mannerisms. Let’s open the settings for both so we can synchronize our Cline and Roo Code MCP servers:

Cline: go to Remote Servers (same place as Marketplace above) and click “Edit Configuration” at the bottom. This opens up the Cline settings JSON.

Roo Code: the three Pizza boxes are located on the top right just because. We click that, then scroll down to the bottom and click “Edit Global MCP”.

A visual depiction of tech debt wanting to be business value when it grows up:

The format is very similar, but we will need to make a few small changes:

Or delegate to one of the 3-8 instances of OpenHands you surely run in parallel.

The orchestrator mode in Roo Code is what we’re after because it can:

Have its own API and MCP settings (use C4Opus for the Orchestrator itself)

Automatically select between contexts we define (and is decent at it)

Each of those contexts have their own model, API, and MCP settings

They can run in parallel with shared MCP state and memory back-ends

Speaking of those contexts, let me give you a simple example: Secretary

Intermediate example: DevOps

Through pragmatic use of allowed commands (aka NOT kubectl deploy) and local MCP servers, you will get pretty good mileage. I suggest setting up, at the very least:

File System

Google Calendar

Google Drive

Slack

A Note On Memory

The importance of local MCP memory (I personally prefer mem0) initialization and scheduled updates can not be understated. Do this with Cline. Example prompts:

"memorize the slack channel ID and name correlations, then check your memories and confirm that you remember them."

"memorize that the latest Linear engineering requests are added under and checked from the [REDACTED] linear project - make sure to read the project description for instructions and memorize those."

"look through the latest 20 messages in each slack channel you have access to, create concise channel purpose summaries, and store them alongside your existing slack channel memory mappings to increase your awareness of which slack channels you should look in and post to for specific topics"

Other Protips

LMs are dumb. Structure projects / *.MDs to mitigate that and tell ‘em to RTFM.

Use “brew tree” and forced tool calls to let LMs better navigate your projects:

Make sure your OpenHands instances each have a separate Mem0 MCP store.

Hotwire your OpenHands instances to plex via A2A (agent to agent).

Just like *.MD files, due to training data, LMs do well with Mermaid Diagrams.

Do not overkill the model used for a task. Larger models are not always better.

#1, by far: know when to use other model types (not LMs).

#2: Know when to use, or not use, thinking models.

#3: Know when to use fast SLMs or nano LMs.

Not all memory is created equal. Even for a sh*tty local only setup, you want to use a combination of Graph, Semantic, Episodic, and Embedding memory.

RAG sucks unless you need dynamic in-context chain of thought

Forced tool calls via something like Algolia are better 90% of the time.

Learn your MCPs like you learned your ABCs.

Example: if you use LMs for code without basics like Context7 …yeah.

Limit ‘em. 10-15 max per context and use RAG to assist with selection.

Good prompts are as important as they are model-specific.

A model-agnostic prompt library will make your life better.

Pre-initialized templates are underrated. (ie., you pre-populate the first 1-5 assistant : user message pairs with a focus on what the LM thinks it said)

Effective system prompt example:

ML is a great hammer but not everything is a nail. In general, LMs and RAG are a last resort. They are both slow, expensive, and highly prone to errors. People who are new to ML (aka most of the world) are in a honeymoon phase. Unless you’re an intern, you already know that a system’s observability should be proportional to its autonomy and a system’s reliability should be proportional to its parent ecosystem’s complexity.

In terms of “code paths to cover with unit tests”, ML is a f*cking nightmare.

Conclusion (aka Self-Promotion)

Did you think that today was the day you’d see a post on the internet without self-promotion? Look at the bright side: it’s not “hey bro I’ve got this sick report free(tm) all you need to do is comment on my post or DM me your email” — Sincerely, a “coach” (unemployed) who claims to have scaled startups from 0 to $10M+ / have F500 clients while attempting to sell some “program” for $10-$5000 on LinkedIn.

Unless you want to join our wonderful team over at Sully AI (I’m hiring and build our Platform/AI tools - unlike this 2023 technology, we actually use modern tooling) this should be around 60th-70th percentile in terms of AI tooling you can get for free.