Artificial General Incompetence

Here we’ll outline how things have gone wrong in AI, provide a brief background on why fixing this is difficult, and suggest a number of effective solutions that minimize the odds of things going wrong again while improving output quality and reliability. For actionable information, scroll directly down to “Lay Audience Solution Strategies.”

Microsoft/OpenAI disbanded their Superalignment team, whose primary focus was AI control systems. Google has disbanded its Responsible Innovation AI team too. Meta set the trend, splitting up its Responsible AI division well ahead of the curve. All three have had a few (an understatement) high-profile blunders.

One underlying cause that I haven’t seen discussed is why their job was hard and why, by extension, the results of their work were mediocre at best. I don’t know how much this contributed to the business decisions to disband those teams.

OpenAI

As you might’ve heard from this particular X post, a car dealership’s chatbot agreed to sell someone a Chevy Tahoe for a grand total of $1.00 USD. This is a great example of how mishandling a very simple, rudimentary LLM implementation can lead to direct GMV loss.

Google

A variety of recent “hiccups”: suggestions to put glue on pizza, eat at least one rock per day to maintain good health, jump off a bridge to fix depression, and make chlorine gas (lethal) to clean your washing machine.

Meta

Between false school bomb threats, failures at elementary-school math, and blatant misinformation, it’s safe to say that accuracy issues with LLMs are not new.

Digital Background

LLMs more or less replicate biological inner workings, even though we don’t truly understand the basis of consciousness or even fundamental aspects of cognition.

A few better-known LLMs and their approximate sizes (Meta publishes its figures; the others are unofficial estimates):

Llama 3 405B: 405 billion parameters, trained on ~15 trillion tokens

Claude 3 Opus: ~2 trillion parameters, trained on ~40 trillion tokens

Gemini 1.5 Pro: ~1.5 trillion parameters, trained on ~30 trillion tokens

GPT-4 Classic: ~1.8 trillion parameters, trained on ~13 trillion tokens

Generally, OpenAI appears to have an advantage in training-dataset quality.

Neurological Background

Parameters are closer to synapses than to neurons (comparing our best guess of how synapses work with weights, biases, etc.). The human brain is estimated to have ~100T synapses, putting the largest of these models (~2T parameters) at about 2% of the size of the human brain.
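The 2% figure is a simple ratio of parameter count to the ~100T synapse estimate. A quick sketch of that arithmetic for each model listed above, using the same approximate (and, except for Llama 3, unofficial) parameter counts; it only restates the rough comparison and inherits all of its caveats:

```python
# Rough parameter-to-synapse comparison using the approximate figures above.
# Only the Llama 3 count is official; the others are unofficial estimates.
HUMAN_SYNAPSES = 100e12  # ~100 trillion synapses (common estimate)

MODEL_PARAMS = {
    "Llama 3 405B": 405e9,
    "Claude 3 Opus": 2.0e12,
    "Gemini 1.5 Pro": 1.5e12,
    "GPT-4 Classic": 1.8e12,
}


def percent_of_brain(params: float, synapses: float = HUMAN_SYNAPSES) -> float:
    """Return a parameter count as a percentage of the synapse estimate."""
    return 100 * params / synapses


for name, params in MODEL_PARAMS.items():
    print(f"{name}: {percent_of_brain(params):.2f}% of ~100T synapses")
```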

There are many nuances to this simplistic comparison. The human brain performs many tasks unrelated to language. Neurons and synapses are also orders of magnitude more complex and multi-faceted than digital LLM parameters.

Existing, strongly reinforced synaptic connections are extremely difficult to prune in biological brains, and they are very difficult to identify in the first place.

Psychological Background

Most critically, even in the human brain, negations are processed differently from, and much less effectively than, affirmative statements. Simon Sinek demonstrated this in a great, simple way. At a presentation, he said: “DON’T think of an elephant.”

When you read that, odds are, you thought of an elephant. I won’t bore you with the neurological mechanisms behind this phenomenon. It’s the same principle behind why unlearning something is orders of magnitude more difficult than learning it.

The gist is that negative directives (e.g., “DON’T do something”) work in ML models and prompts about as well as they do when given to a human: barely. Saturating a prompt with positive directives is the most effective way to “drown out” the unwanted behavior and, in effect, achieve what the negative directive intended.
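One practical way to apply this is to scan a prompt for negative directives before rewriting them as positive ones. Below is a minimal sketch, assuming plain English prompts; the regex and the `find_negative_directives` helper are hypothetical illustrations that will miss many phrasings, and the example prompts are made up:

```python
import re

# Hypothetical, deliberately simple pattern for common English negations
# (matches don't / don’t / dont, "do not", "never", "avoid").
NEGATION = re.compile(r"\b(don['’]?t|do not|never|avoid)\b", re.IGNORECASE)


def find_negative_directives(prompt: str) -> list[str]:
    """Return the sentences in `prompt` that contain a negation keyword."""
    sentences = re.split(r"(?<=[.!?])\s+", prompt.strip())
    return [s for s in sentences if NEGATION.search(s)]


negative = "Don't use jargon. Never write more than three paragraphs."
# Positive saturation: state the desired behavior, several ways, instead.
positive = (
    "Use plain, everyday words. Write for a general audience. "
    "Keep the answer to three short paragraphs or fewer."
)

print(find_negative_directives(negative))
print(find_negative_directives(positive))
```

Both prompts aim for the same outcome, but the second states only what the model should do, several times over.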

Lay Audience Solution Strategies

I haven’t seen this specific information posted online anywhere, and the intent of my posts is to deliver original, undiscussed content, not a rehash of other content.

Prompts should be written on a per-model and per-model-version basis. A decent analogy is driving a car: GPT is Europe, Claude is China, and Gemini is the US. The rules of the road differ vastly among these three.

Different model versions are more like the rules of the road in different US states. There are many similarities, but a few key differences you may not be aware of can get you into hot water.

Each model version has its own subtle “dialect” (think English in SoCal vs. the Midwest or the South) in how it structures sentences and selects specific words. The good news is that we don’t need to know that dialect. We’ll go over how to make a specific model version build the optimal prompt for itself, based on your prompt.

Simplified process for increasing the effectiveness of a prompt: first, refine it iteratively (re-run it repeatedly, feeding each output back in) with these directives (your prompt goes between the two sets of ```):

Make this more succinct: ```YOUR_PROMPT```

Proofread this and make it less wordy: ```YOUR_PROMPT```

Make this more concise: ```YOUR_PROMPT```

You’ll find that the first directive shortens the prompt the least, the middle one shortens it by a fair amount, and the last one shortens it the most. What we’re looking for is instructions that are as compressed as possible without loss of meaning. Keep re-running the prompt through this a few times; 3-5 passes are generally enough.
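If you call a model through an API instead of a chat window, the re-running loop above can be scripted. A minimal sketch, assuming a hypothetical `call_llm` function that sends one message and returns the reply text (swap in your provider’s client); running one chosen directive per loop and defaulting to three passes are assumptions based on the 3-5 suggestion above:

```python
from typing import Callable

FENCE = "`" * 3  # the triple backticks that wrap YOUR_PROMPT

# The three directives from above, from least to most aggressive.
DIRECTIVES = {
    "succinct": "Make this more succinct: ",
    "less_wordy": "Proofread this and make it less wordy: ",
    "concise": "Make this more concise: ",
}


def compress_prompt(
    prompt: str,
    call_llm: Callable[[str], str],  # hypothetical: message in, reply text out
    directive: str = "less_wordy",
    passes: int = 3,
) -> str:
    """Run one directive repeatedly, feeding each reply back in as the new prompt."""
    for _ in range(passes):
        message = f"{DIRECTIVES[directive]}{FENCE}{prompt}{FENCE}"
        prompt = call_llm(message).strip()
    return prompt
```

Any function with the same shape works for `call_llm`, which also makes it easy to test the loop offline with a stub.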

The triple backticks play a crucial role because most LLMs are trained heavily on Markdown (*.md) text, in which ``` marks a code block. During this process, the model restructures the prompt to resemble its own internally derived dialect, which alone makes it more effective. Next, we re-run it through this:

Concisely paraphrase this prompt in your own words: ```YOUR_PROMPT```

Just like above, 3-5 passes are generally enough. Once you re-run it enough times, the modifications it makes become minimal or non-existent. This is how we know our prompt is aligned with the model’s internal dialect.
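The “modifications become minimal” check can be made explicit by scoring how much each paraphrase differs from the previous version. A sketch, again assuming the hypothetical `call_llm` helper; `difflib.SequenceMatcher` is just one convenient character-level measure, and the 0.95 threshold is an arbitrary assumption to tune:

```python
from difflib import SequenceMatcher
from typing import Callable

FENCE = "`" * 3
PARAPHRASE = "Concisely paraphrase this prompt in your own words: "


def similarity(a: str, b: str) -> float:
    """Character-level similarity between two prompt versions (0.0 to 1.0)."""
    return SequenceMatcher(None, a, b).ratio()


def paraphrase_until_stable(
    prompt: str,
    call_llm: Callable[[str], str],  # hypothetical: message in, reply text out
    threshold: float = 0.95,         # assumed cutoff for "minimal changes"
    max_passes: int = 5,
) -> tuple[str, int]:
    """Re-paraphrase until one pass barely changes the prompt.

    Returns the final prompt and the number of passes used.
    """
    for n in range(1, max_passes + 1):
        revised = call_llm(f"{PARAPHRASE}{FENCE}{prompt}{FENCE}").strip()
        if similarity(prompt, revised) >= threshold:
            return revised, n
        prompt = revised
    return prompt, max_passes
```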

Next, open a new session to clear the conversation context, then repeat the last step (concisely paraphrase this prompt in your own words). If the changes are minor in a fresh session with a fresh context, odds are you now have a moderately good prompt!
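The fresh-session step amounts to sending the same paraphrase request with no prior conversation history. A sketch, assuming a hypothetical chat-style `call_chat` function that takes a list of role/content messages (a common shape for chat APIs, but check your provider’s client); the threshold is again an assumed value:

```python
from difflib import SequenceMatcher
from typing import Callable

FENCE = "`" * 3
PARAPHRASE = "Concisely paraphrase this prompt in your own words: "
Message = dict[str, str]  # e.g. {"role": "user", "content": "..."}


def fresh_session_check(
    prompt: str,
    call_chat: Callable[[list[Message]], str],  # hypothetical chat client
    threshold: float = 0.95,                    # assumed cutoff for "minor"
) -> tuple[bool, float]:
    """Paraphrase once in a brand-new conversation and score how much changed."""
    # A new session carries no earlier turns, so the history holds only this
    # single request and nothing from previous refinement passes.
    history: list[Message] = [
        {"role": "user", "content": f"{PARAPHRASE}{FENCE}{prompt}{FENCE}"}
    ]
    revised = call_chat(history).strip()
    score = SequenceMatcher(None, prompt, revised).ratio()
    return score >= threshold, score
```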

Engineering Solution Strategies

This deserves its own post, which will be coming out on 06/04!

If your “Enterprise AI Implementation” was “advised” by Deloitte, McKinsey, Accenture, or Gartner, you’re in the same boat as ~50% of my third startup’s customers, who account for the lion’s share of our professional services income: cleaning up the mess left by people who had no idea what they were doing but pretended otherwise. If you’re the cleanup crew, this is for you!

Research Solution Strategies

This also deserves its own post, which is coming on 06/07. We’ll go over effective solution and mitigation strategies from research, foundation-model training, data-preparation, and combined data + training perspectives.

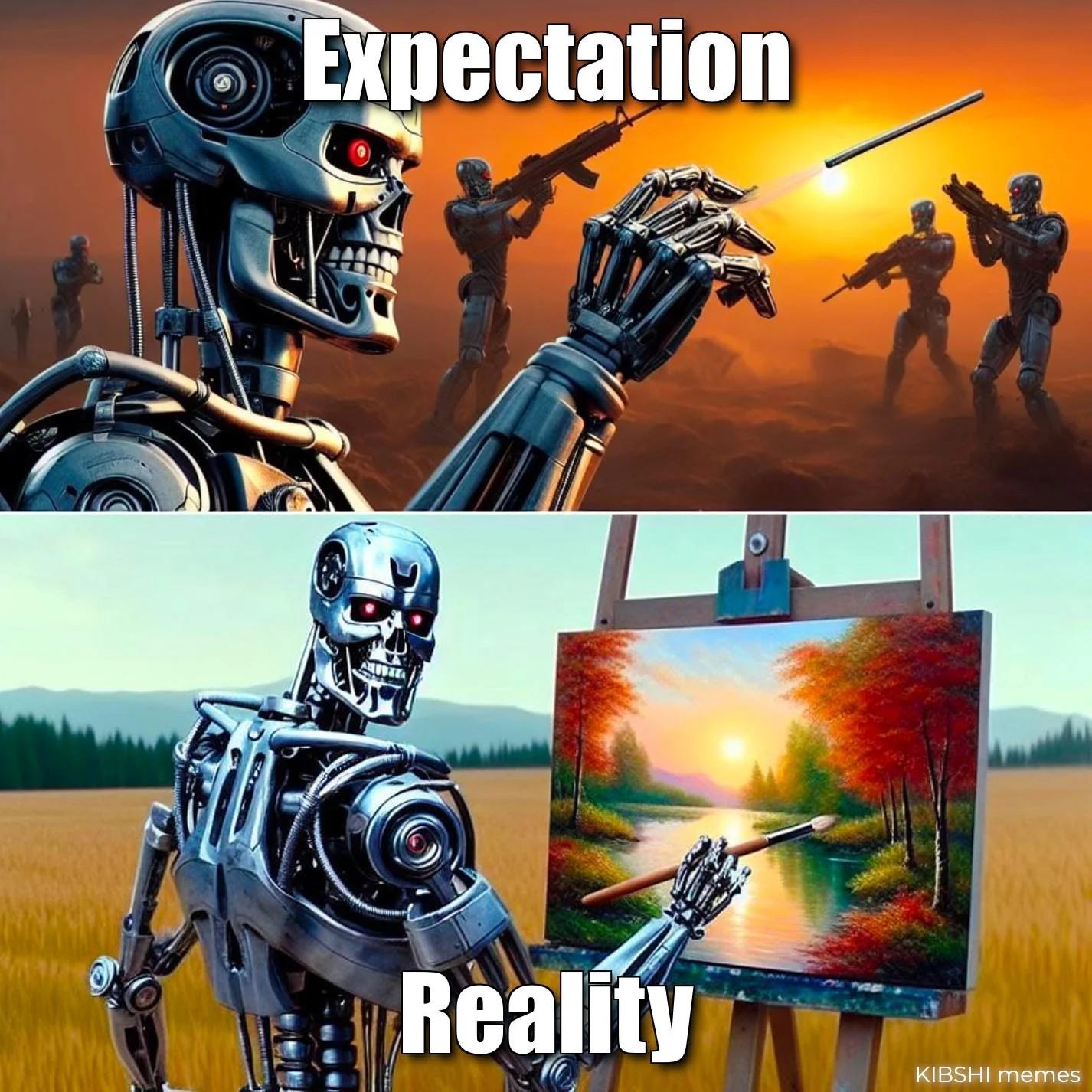

And finally, have a meme!