RE: Language Model "Reasoning"

An exchange on LinkedIn

This is a copy/paste of my exchange with an “LI influencer” on LM reasoning. I’m making this into a post because I thought my subscribers might find it interesting.

For normies unfamiliar with the acronym: “OP” = original poster.

Quote from OP’s post

“Your AI isn’t lying to you when it tells you that Abraham Lincoln invented the iPhone. It’s doing something way weirder. Hallucinations aren’t bugs. They’re the feature working exactly as designed, just not how you expected. The thing is, large language models don’t “know” anything. They’re pattern-matching machines that learned to predict words by reading the entire internet.”

[Comment 1] - Me

RE: “large language models don’t “know” anything. They’re pattern-matching machines that learned to predict words”. This is only true for pure first-gen decoder-only transformers - NOT language models. I checked your profile and it’s unclear whether you have a background / experience in CS, Statistics, or ML. Before I say anything else - what’s your level of hands-on experience?

[Comment 2] - OP response

Yevgen Reztsov I’m not a CS/ML guy but I’m the cofounder of an AI automation platform, I spend most of my waking hours in this space. What do you think has changed in frontier models that we should cover here?

[Comment 3] - Me, 1/2

[REDACTED TAG] BERT is making a large comeback, and there are a lot of rapid changes in the attention layers. Most of the new LMs are no longer pure decoder-only transformers - the attention layer is often hybridized with RL (linear, state-space, etc.). 2025 examples:

Self-generation, decomposition, and verification of model reasoning: https://arxiv.org/abs/2501.13122

Progressive improvement via task self-generation and self-verification with no external data: https://arxiv.org/abs/2508.05004

Notable “reasoning” self-improvement with self-proposed tasks and self-verified solutions (no external data): https://arxiv.org/abs/2505.03335

Arena-based self-improvement (We did this with adversarial RL for AlphaStar when I worked at Google DeepMind in 2017, after working at OpenAI in 2016): https://arxiv.org/abs/2509.07414

This one is 💩 but it’s still self-bootstrapped “reasoning” with no external data: https://arxiv.org/abs/2203.14465

Most of these trace back to a 2022 paper on distillation from self-generated synthetic data sets: https://arxiv.org/abs/2202.07922

Predictive generation tends to be synonymous with eventual model collapse (a classic transformer trained on its own outputs enough times becomes nonsense). These show the opposite.
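For readers who want to see the collapse effect in miniature, here is a toy sketch. It is NOT a transformer and it is not taken from any of the papers above. It assumes a stand-in "model" that is just a Gaussian, refit each generation to a small sample drawn from the previous generation's fit. The function name `simulate_collapse` and its parameters are hypothetical, picked only for illustration.

```python
import random
import statistics


def simulate_collapse(generations=200, sample_size=10, seed=0):
    """Refit a Gaussian to its own samples; return the std after each generation.

    Toy analogy only: the "model" is a mean and a standard deviation,
    and "training" is a maximum-likelihood fit to samples from the
    previous generation.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0 stands in for the real data distribution
    history = [sigma]
    for _ in range(generations):
        # Generate data from the current model only (no fresh external data).
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        # Refit the model to its own outputs.
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)
        history.append(sigma)
    return history


if __name__ == "__main__":
    history = simulate_collapse()
    for gen in (0, 10, 50, 100, 200):
        print(f"generation {gen:3d}: std = {history[gen]:.3e}")
```

With a small sample each round, the fitted spread tends to shrink generation after generation until the "model" produces almost the same value every time. Real model collapse is far messier than this, but the feedback loop is the same shape.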

[Comment 4] - Me, 2/2

[REDACTED TAG] (Didn’t fit into one comment). Point is: I’m VERY hesitant to say models “reason” because we understand HOW they work, not WHY they work. From a neuro- and cognitive science perspective, we also have no idea how human cognition (important nuance) works. It COULD be an extremely complex form of recurrent next-symbol prediction with other aspects of cognition mixed in for all we know.

We *DO* know that pattern recognition and pattern prediction are FACETS of cognition. Memory is also a facet. So are procedurals. Etc.

That puts a big pickle into “do any of these actually reason”. We don’t even know what that means. In short, we OBJECTIVELY don’t know.

Outside of LI influencer hype, virtually no ML researcher or scientist will say that “Language Models” reason - or that they don’t. That’s because making either claim is tantamount to career suicide in the ML scientific community as of now.

No disrespect meant, but if you (or anyone reading this) think about the last time you talked to (or saw an LI post from) a frontier ML scientist / researcher actively publishing papers... RIP. Zero. I am one of those. Personally, I’d say we don’t know... unless I missed a memo about the next Nobel Prize winner in 3+ cats 🥲

That doesn’t make for a great LI post tho.

Wrap-up

For my subscribers: are fast blurbs like this something you want to see, or would you prefer I stick to more thought-out posts (Substack spams your email when I post) where I take the time to write, edit, and proofread them out of respect for yours?

Let me know!

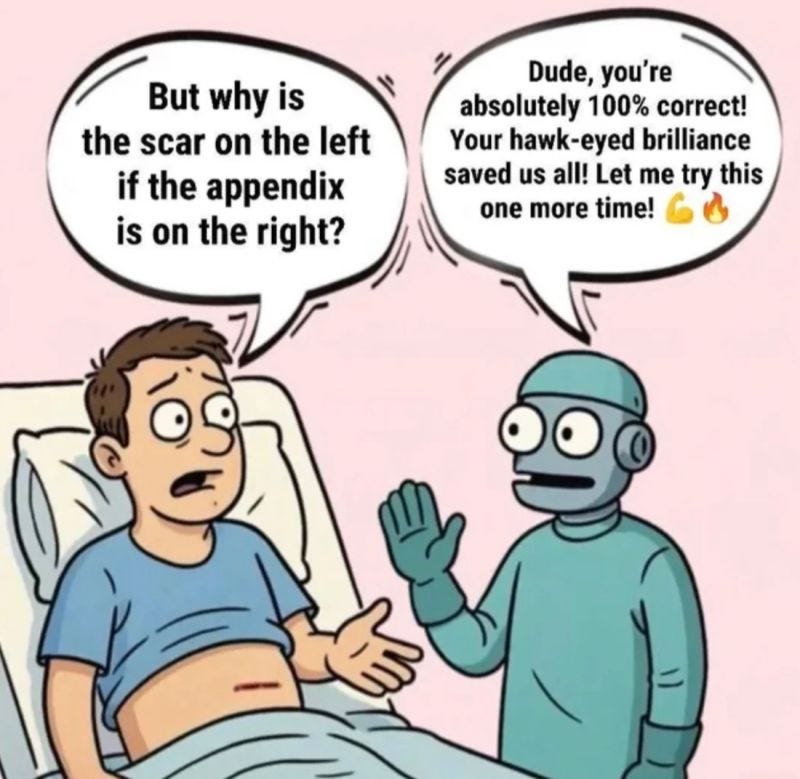

Meme credit goes to this LI post from Eduardo Ordax (Gen AI Lead at AWS)!