ML: A Networking Perspective, Pt2/X

Part 2! This series is designed for SWEs. No DevOps experience required.

This article responds to feedback on the previous one. It covers what this series enables for engineers and users, in a use-case-based way that is friendly to a lay audience.

TLDR: even if all you do is make OpenAI API calls, your users’ TTFT (time to first token) drops by 395-870+ms. Your vendor footprint shrinks, you save money, you get better consistency and less downtime, and your developers love you.

This bridges the gap between companies wanting cross-cloud benefits and those with a Platform team and budget to deploy and maintain K8s clusters in every cloud, with vertically / horizontally scaled Istio sidecars, private SSL certificates, VPC peering, and an Envoy service mesh. Despite its transitional nature, this approach is robust, resilient, maintainable, performant, and scalable. It can serve, and has served, 1M+ cross-VPC RPS.

Previous posts: “ML: A Networking Perspective, Pt1/X”, “ML: A Hardware Perspective”.

Benefits (Attempt #2)

1. User Experience

Everything we do is for our users. Seamless UIUX requires sane response times:

20-100ms: Feels instant, highly responsive - this is the ideal experience.

100-300ms: Noticeable, but still considered near-instant and seamless.

>300ms: Noticeable delay, mild but acceptable UX degradation.

>500ms: Perceived lag, sharp decline in UX, and user frustration.

>1sec: Significant UX degradation and likely user abandonment.
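As a minimal sketch, the bands above can be turned into a helper for tagging measured response times in dashboards or tests. The function name and labels are illustrative, and treating anything under 20ms as "instant" is an assumption, since the list starts at 20ms.

```python
def classify_latency(ms: float) -> str:
    """Map a response time in milliseconds to the UX bands listed above."""
    if ms <= 100:
        return "instant"            # 20-100ms (sub-20ms is assumed to be instant too)
    if ms <= 300:
        return "near-instant"       # 100-300ms
    if ms <= 500:
        return "noticeable delay"   # >300ms
    if ms <= 1000:
        return "perceived lag"      # >500ms
    return "likely abandonment"     # >1sec


if __name__ == "__main__":
    for sample in (45, 250, 420, 700, 1500):
        print(f"{sample}ms -> {classify_latency(sample)}")
```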

Infra is necessary to build things that are fast. Abstracting that infra is necessary for enabling engineers to build those things fast. On the front-end, that looks like this:

Analysis paralysis caused by free-form text is the silent killer of user experience. A generic, easy-bake recipe for reducing user cognitive load and improving UIUX is:

Superior UIUX for ML products often involves moving away from free-form, text-only interactions as quickly as possible (e.g., Lovelace). This requires a data-driven UI player.

The backend sends a JSON payload representing your users' UI layout (ex: open code canvas, file workspace, query engine, document uploads or downloads, etc.). Like this:
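A rough sketch of what such a payload could look like, built on the backend with Python dataclasses. The schema is hypothetical: `Panel`, `Layout`, and every field name and panel kind below are assumptions for illustration, not a format defined by this series.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Any, Dict, List


@dataclass
class Panel:
    kind: str                                   # hypothetical panel type, e.g. "code_canvas"
    title: str
    props: Dict[str, Any] = field(default_factory=dict)


@dataclass
class Layout:
    version: int                                # lets the UI player reject layouts it cannot render
    panels: List[Panel]


def render_payload(layout: Layout) -> str:
    """Serialize the layout into the JSON the front-end UI player would consume."""
    return json.dumps(asdict(layout), indent=2)


layout = Layout(
    version=1,
    panels=[
        Panel("code_canvas", "Editor", {"language": "python"}),
        Panel("document_upload", "Upload files", {"accept": [".pdf", ".csv"]}),
    ],
)
payload = render_payload(layout)

if __name__ == "__main__":
    print(payload)
```

The front-end then only needs a renderer per panel kind, so the backend can switch the user from free-form text to a structured view by sending a different layout.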

2. Developer Experience

The ideal application developer experience involves at least 70% - ideally over 90% - of what they write being business logic. Less boilerplate equals more customer value.

This series will provide a comprehensive guide for a single engineer to empower your organization with the following advantages in 1-3 days, troubleshooting included:

Front-End Development

Provide a simple way to use CSR, SSR, SSG, ISR, and SSSR with minimal boilerplate.

Remove caching, chunking, compression, and asset optimization from app logic.

Provide a centralized GUI for testing and optimizing front-end performance.

Remove authorization and authentication from app logic by centralizing it.

Reliable global <15ms web, mobile, and native front-end performance.

Back-End Development

Faster TTFT and TPS (tokens per second). OpenAI example:

Public API: 600-800ms TTFT / 600ms-1.5sec total latency. Not great UX.

Azure API: 200-300ms TTFT / 300-700ms total latency. Much better UX.

Azure also offers enterprise security, compliance, and data residency.

Services in AWS and GCP will be able to take full advantage of this.

Unlike with public APIs, API downtime in Azure is virtually unheard of.

Significantly faster embedding queries.

AlloyDB takes 10-20ms to query against millions of vectors.

Supabase pgvector takes 30-80ms or more at similar scale.

Inter-VPC vs public transit shaves off another 85-200ms+.

This makes your RAG queries 95-270ms faster.

Your models query their embeddings locally.

The above takes us from frustrating response times to a great user experience. Every company I've done this for had an immediate increase in user satisfaction.
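One way to reproduce the latency figures above is simple range arithmetic: the smallest saving pairs the best slow case with the worst fast case, and the largest saving does the opposite. The helper names are illustrative, and combining the ranges this way is an assumption about how the totals were derived, although it does land on the same 95-270ms and 395-870ms numbers.

```python
from typing import Tuple

Range = Tuple[int, int]  # (low, high) in milliseconds


def saving(slow: Range, fast: Range) -> Range:
    """Smallest and largest possible saving when moving from `slow` to `fast`."""
    return (slow[0] - fast[1], slow[1] - fast[0])


def add(a: Range, b: Range) -> Range:
    """Add two independent savings ranges."""
    return (a[0] + b[0], a[1] + b[1])


PUBLIC_TTFT = (600, 800)      # OpenAI public API TTFT
AZURE_TTFT = (200, 300)       # Azure API TTFT
PGVECTOR_QUERY = (30, 80)     # Supabase pgvector query time
ALLOYDB_QUERY = (10, 20)      # AlloyDB query time
TRANSIT_SAVING = (85, 200)    # inter-VPC vs public transit

ttft_saving = saving(PUBLIC_TTFT, AZURE_TTFT)         # (300, 600)
vector_saving = saving(PGVECTOR_QUERY, ALLOYDB_QUERY)  # (10, 70)
rag_saving = add(vector_saving, TRANSIT_SAVING)        # (95, 270)
total_saving = add(ttft_saving, rag_saving)            # (395, 870)

if __name__ == "__main__":
    print("RAG saving:", rag_saving)
    print("Total saving:", total_saving)
```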

Reduce your vendor footprint and allow application developers to call any service or model using unified internal authorization, instead of managing vendor API keys, rotations, etc., on a per-application basis - the definition of insanity.

Minimize toil by centralizing key management and model / API vendor updates.

Improve security by never exposing ALBs, NLBs, or services for external access.

Explicit and centralized outbound access control and logging.

Simplify end-user and organization billing and usage tracking by unifying it.

Centralize vendor failover and adherence to your usage / throttling limits.
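To make the last point concrete, here is a minimal sketch of centralized failover that also respects per-vendor limits. Everything in it is hypothetical: the provider names, the `send` callable, and the simple "remaining calls" quota stand in for whatever your proxy layer actually tracks.

```python
from typing import Callable, Dict, List


class AllProvidersFailed(Exception):
    """Raised when every provider was skipped or returned an error."""


def call_with_failover(
    providers: List[str],
    send: Callable[[str], str],
    remaining_quota: Dict[str, int],
) -> str:
    """Try providers in priority order, skipping any that are out of quota."""
    errors: Dict[str, str] = {}
    for name in providers:
        if remaining_quota.get(name, 0) <= 0:
            errors[name] = "quota exhausted"
            continue
        remaining_quota[name] -= 1  # an attempt counts against the limit, even if it fails
        try:
            return send(name)
        except Exception as exc:  # any vendor error triggers failover to the next provider
            errors[name] = str(exc)
    raise AllProvidersFailed(errors)


def fake_send(name: str) -> str:
    """Stand-in for a real vendor call: the primary is down, the rest respond."""
    if name == "primary":
        raise RuntimeError("503 from vendor")
    return f"response from {name}"


if __name__ == "__main__":
    quota = {"primary": 5, "secondary": 0, "tertiary": 3}
    print(call_with_failover(["primary", "secondary", "tertiary"], fake_send, quota))
```

Because the logic lives in one place, application code keeps calling a single internal endpoint and never needs to know which vendor answered.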

There are many other advantages, like enabling access to serverless models on all major clouds (Gemini Flash, for example, has uses in virtually every LLM workflow), but this article's goal is to cover everyday benefits for users and application developers.

A Robust Full-Stack Interface

Enabling your backend and frontend applications to call the same service endpoints will make your full-stack engineers happy and they will say nice things about you.

This also lets you intercept and centrally authorize any vendor or endpoint. Making a call to Together AI, OpenRouter, Weights & Biases, ScrapingBee, etc.? No problem!

Your service’s SDK calls what it thinks is https://scrapingbee/foobar, and your internal DNS routes it 30-100ms+ faster via the proxies and a global backbone, injecting the proper API keys without your back-end service ever knowing about it.
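Conceptually, the rewrite step at the proxy boils down to a lookup and a header swap. The sketch below shows that idea as a pure function; the route table, the upstream hostname, the header name, and the secret store are all placeholders rather than any real vendor's API or the configuration used later in this series.

```python
from typing import Dict, Tuple
from urllib.parse import urlsplit

# Hypothetical routing table: internal hostname -> upstream base URL, auth header, secret name.
ROUTES: Dict[str, Dict[str, str]] = {
    "scrapingbee": {
        "upstream": "https://vendor.example.invalid",  # placeholder, not the real vendor host
        "header": "X-Api-Key",                         # placeholder header name
        "secret": "SCRAPING_VENDOR_KEY",
    },
}

# Hypothetical central secret store; in practice this would be a vault, not a dict.
SECRETS: Dict[str, str] = {"SCRAPING_VENDOR_KEY": "demo-key"}


def rewrite_request(
    url: str,
    headers: Dict[str, str],
    routes: Dict[str, Dict[str, str]],
    secrets: Dict[str, str],
) -> Tuple[str, Dict[str, str]]:
    """Map an internal URL to its upstream and inject the centrally managed key."""
    parts = urlsplit(url)
    route = routes[parts.hostname]  # KeyError means the host is not an approved vendor
    upstream = route["upstream"] + parts.path
    if parts.query:
        upstream += "?" + parts.query
    # Drop any key the caller sent so only the central one reaches the vendor.
    out = {k: v for k, v in headers.items() if k.lower() != route["header"].lower()}
    out[route["header"]] = secrets[route["secret"]]
    return upstream, out


if __name__ == "__main__":
    print(rewrite_request("https://scrapingbee/foobar", {"Accept": "application/json"}, ROUTES, SECRETS))
```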

These proxies support DB connections and other cool stuff - they cover HTTP/S/2/3 (QUIC), TCP and UDP, TCP and UDP Streams, GRE, IPIP, IPSec, gRPC and WebSockets.

3. Performance

Fast, predictable infrastructure performance directly improves user satisfaction and reduces infrastructure costs. Here are some real-world benchmarks that show this:

800Mbps+ per-node throughput on streams and large requests.

1200-1600 RPS with 3-4ms internal traffic between clouds.

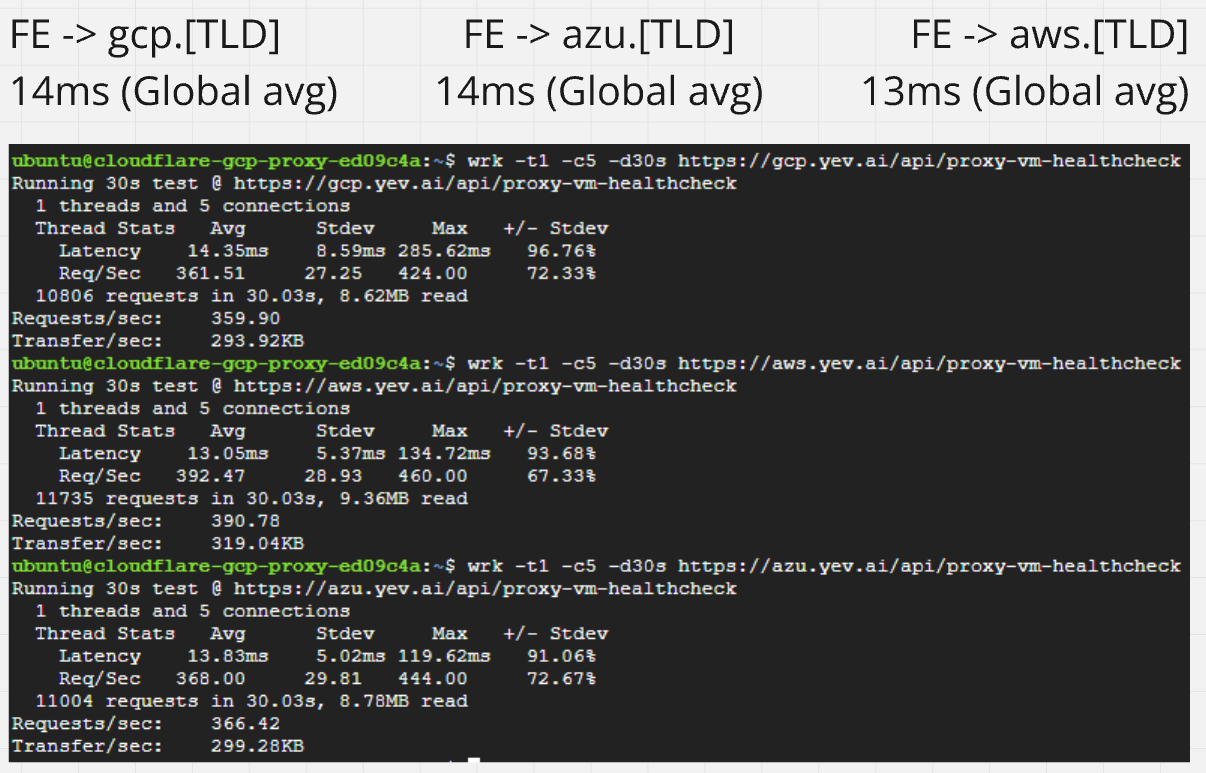

350-400 RPS with 13-14ms global front-end performance.

Inter-VPC performance (TLDR: 3-4ms):

This is made possible by physical colocation of the proxy VMs, even though traffic still crosses public network transit (the internet). I ran these tests for 48 hours; for 11 minutes of that window (roughly 0.4% of the time), congestion raised latency to 9-14ms with zero packet loss. Compared to the VPC peering alternative of $2700-3500 per month, this also gives us higher burst capacity.

Front-end performance (TLDR: 13-14ms):

These were non-cached requests to an NGINX server on the nodes (which uses more CPU than proxy forwarding), and no node exceeded 25% CPU or 630 MB RAM during the test. Speaking of our nodes, this level of throughput costs all of $21 per month:

This also gets us universally accelerated outbound API requests which are normally either unavailable out of the box altogether or cost insane amounts in each cloud.

We will add redundancy and fault tolerance while also making it 60-90% cheaper with auto-healing scaling groups composed of spot instances. This step is also necessary to verify that our design is robust enough for production usage. People talk about resilience and durability, but until you run entirely on spot instances, you have neither.

4. Cost

This setup pays for itself by reducing cloud costs and operational complexity. By the time we’re done, you’ll be running all of it for under $100/mo across all 3 clouds.

Engineering Overhead Savings

Application development teams move faster because they can self-service infra.

No per-service endpoint environment variables and update redeployments.

Unified request tracing drastically simplifies debugging of production issues.

Unified request logging removes redundant middleware from our services.

No more per-cloud and per-account SSL certificate rotation (aka Platform KTLO).

This also saves us from TLS/SSL termination overhead in all our services.

Platform is no longer a dependency or blocker and can focus on new features.

Compute Overhead Savings

Per-account/CDN/ALB AWS Global Accelerators ($18/mo each)

Per-account/CDN Azure Front Doors ($35/mo standard, $330/mo premium)

Cloudflare performance is equivalent to premium Azure Front Doors.

Per-VPC AWS private DNS ($0.50/mo per zone + $0.40 per 1M queries)

Per-VPC GCP private DNS ($0.20/mo per zone + $0.40 per 1M queries)

GCP NAT Gateway costs (this can get insanely expensive very quickly)

Per-VPC Azure private DNS ($0.50/mo per zone + $0.40 per 1M queries)

Azure NAT Gateway costs ($32/mo each)
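To get a feel for how the private DNS line items add up, here is a small calculator using the per-zone and per-million-query prices listed above. The workload in the example (3 zones and 50M queries per month in each cloud) is a made-up assumption, and real bills may include tiers or discounts not modeled here.

```python
from typing import Dict, Tuple

# (monthly fee per zone, price per 1M queries) in USD, taken from the list above.
PRIVATE_DNS_PRICING: Dict[str, Tuple[float, float]] = {
    "aws": (0.50, 0.40),
    "gcp": (0.20, 0.40),
    "azure": (0.50, 0.40),
}


def monthly_dns_cost(cloud: str, zones: int, million_queries: float) -> float:
    """Flat estimate: zone fees plus query fees, rounded to cents."""
    zone_fee, per_million = PRIVATE_DNS_PRICING[cloud]
    return round(zones * zone_fee + million_queries * per_million, 2)


if __name__ == "__main__":
    # Hypothetical workload: 3 private zones and 50M queries per month in each cloud.
    total = 0.0
    for cloud in PRIVATE_DNS_PRICING:
        cost = monthly_dns_cost(cloud, zones=3, million_queries=50)
        total += cost
        print(f"{cloud}: ${cost:.2f}/mo")
    print(f"total: ${total:.2f}/mo")
```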

What’s Next

In the next article, Pt3/X, we'll set up a proxy in each cloud that responds to a health-check endpoint and connect it to our public domain via Cloudflared. This entire series will be done in a secure, zero-trust way that never makes our VMs externally accessible, using neat techniques like UDP hole-punching, reverse tunnels, and relay networks.

After that, the course schedule will be:

Pt4: Add direct inter-VPC mesh communication to proxies.

Pt5: Set up automagic DNS routing to proxies in each VPC.

Pt6: Optimize egress routing through Cloudflare's backbone.

Pt7: Introduce fault tolerance with spot instance groups.

Pt8: Expand our mesh to a micro-cloud like Lambda Labs.

After that, we’ll do an "ML: A Software Perspective" series, where we write middleware and provide examples using popular frameworks like PyTorch and TensorFlow. We'll also include tools like Autogen, Semantic Kernel, mem0, Composio, LangGraph, AG2, CrewAI, Wetrocloud, Vercel SDK, LlamaIndex, and other easy-bakes for the "AI" crowd.

Let’s make sure the below depicts our competitors’ customers and APIs - not ours.